Use Cases

All links lead to corresponding terms in Glossary

I want to extract contact data of travel related companies

Go to New Session dialog.

In Data Source section select Search Engines.

Enter «travel» in Keywords box.

In Extraction Data section select what type of data you want to extract: email, phone, fax, etc.

Press Start button and wait until session is finished or press Pause/Stop button.

Then press Save icon in the right upper corner to open File Export dialog.

Browse the file where program will save the data and select further configuration.

Press Save button.


I want to extract contact data of travel related companies of Australia

Go to New Session dialog.

In Data Source section select Search Engines.

Enter «travel» in Keywords box.

In Extraction Data section select what type of data you want to extract: email, phone, fax, etc.

Press Start button and wait until session is finished or press Pause/Stop button.

Then press Save icon in the right upper corner to open File Export dialog.

Browse the file where program will save the data and select further configuration.

Press Save button.

I want to get more data of travel related companies of Australia

Repeat the previous steps, but enter more keywords, for example:

- travel;

- hotel;

- cruise.

I want to build a domain list of health/medicine related websites

Go to New Session dialog.

In Data Source section select Search Engines.

In Keywords box enter the following keywords:

- health;

- medicine, etc.


In Extraction Data section select what type of data you want to extract: URLs.

Press Start button and wait until session is finished or press Pause/Stop button.

Then press Save icon in the right upper corner to open File Export dialog.

Browse the file where program will save the data and select further configuration.

Press Save button.

I want to extract all data from the website http://www.mydomain.com

Go to New Session dialog.

In Data Source section select Site.

Enter website URL in Start URL box (http://www.mydomain.com).

Select maximal depth = 10.

Select what type of data you want to extract: email, phone, fax, etc.

Press Start button and wait until session is finished or press Pause/Stop button.

Then press Save icon in the right upper corner to open File Export dialog.

Browse the file where program will save the data and select further configuration.

Press Save button.

I have a list of URLs in a file and I want to extract data from those URLs

Go to New Session dialog.

In Data Source section select URL List.

Browse the file containing list of URLs. This must be plain text file with one URL per line, each line starting with http://.

Select One Page Only to process only the specified URLs, or select Depth = 10 to extract data from the entire website of each URL in the text file.

In Extraction Data section select what type of data you wish to extract: email, phone, fax, etc.

Press Start button and wait until session is finished or press Pause/Stop button.

Then press Save icon in the right upper corner to open File Export dialog.

Browse the file where program will save the data and select further configuration.

Press Save button.
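The URL list format described above (plain text, one URL per line, each line starting with http://) can be checked before the file is loaded. The following is a minimal sketch and not part of WDE; the function names are hypothetical, and adding the http:// prefix to bare host names is an assumption about what you would want.

```python
from pathlib import Path


def clean_url_list(lines):
    """Split raw lines into usable URLs and rejected entries.

    Usable URLs are unique, stripped of whitespace, and start with http://.
    """
    seen = set()
    cleaned = []
    rejected = []
    for raw in lines:
        url = raw.strip()
        if not url:
            continue  # skip blank lines
        if "://" not in url:
            # Assumption: a bare host such as "www.mydomain.com" gets the prefix.
            url = "http://" + url
        elif not url.startswith("http://"):
            rejected.append(url)  # another scheme, e.g. ftp://
            continue
        if url not in seen:
            seen.add(url)
            cleaned.append(url)
    return cleaned, rejected


def write_url_list(urls, path):
    """Write the URLs as a plain text file with one URL per line."""
    Path(path).write_text("\n".join(urls) + "\n", encoding="utf-8")
```

Rejected entries are returned rather than silently dropped, so you can review them before starting the session.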

I want to extract all contact data from Yahoo! Directory/Photographers to send them an invitation to visit my new photographer forum

Go to New Session dialog.

In Data Source section select Site.

Enter website URL in Start URL box (http://dir.yahoo.com/Arts/Visual_Arts/Photography/Photographers/).

Select depth = Offsite.

Then select what type of data you want to extract: email, phone, fax, etc.

Press Start button and wait until session is finished or press Pause/Stop button.

Then press Save icon in the right upper corner to open File Export dialog.

Browse the file where program will save the data and select further configuration.

Press Save button.

I want to extract phone/fax numbers of real estate companies of Canada, Toronto area

Go to New Session dialog.

In Data Source section press Search Engines radio button.

Then press Select Search Engines button and use drop-down list to select the country (Canada).

Choose search engines from the list below. By default US/international engines are selected.

Enter «real estate» in Keywords box.

In Extraction Data section select type of data you wish to extract: phones, faxes.

Now go to Phone/Fax Filter and enter in both boxes:

- 416;

- 647;

so that WDE extracts only those phone/fax numbers that contain a Toronto area code.
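To illustrate what an area-code filter does, here is a minimal sketch in Python. It is not WDE's own logic: the function name is hypothetical, and it assumes 10-digit North American numbers with an optional leading country code 1, with the area code checked at the start of the number.

```python
import re

TORONTO_AREA_CODES = ("416", "647")


def has_area_code(phone, area_codes=TORONTO_AREA_CODES):
    """Return True if the phone number starts with one of the area codes."""
    digits = re.sub(r"\D", "", phone)  # keep digits only
    if len(digits) == 11 and digits.startswith("1"):
        digits = digits[1:]  # drop the country code
    return len(digits) == 10 and digits[:3] in area_codes


if __name__ == "__main__":
    for number in ["(416) 555-0100", "+1 647 555 0199", "604-555-0123"]:
        print(number, has_area_code(number))
```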

Press Start button and wait until session is finished or press Pause/Stop button.

Then press Save icon in the right upper corner to open File Export dialog.

Browse the file where program will save the data and select further configuration.

Press Save button.

I want to compile a list of offshore, banking, tax, accounting related websites that do link exchange with other sites

Go to New Session dialog.

In Data Source section select Search Engines.

In Keywords box generate keywords using the following two lists:

- offshore banking tax accounting;

- link exchange trade links swap link add url;
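Generating keywords from two lists can be pictured as pairing every entry of the first list with every entry of the second. The sketch below assumes that this is how the lists are combined, and it assumes a grouping of the second list into four phrases; neither detail is confirmed by this page.

```python
from itertools import product

TOPICS = ["offshore", "banking", "tax", "accounting"]
# Assumption: the second list consists of these four phrases.
LINK_TERMS = ["link exchange", "trade links", "swap link", "add url"]


def combine_keywords(first, second):
    """Pair every keyword of the first list with every keyword of the second."""
    return [f"{a} {b}" for a, b in product(first, second)]


if __name__ == "__main__":
    for keyword in combine_keywords(TOPICS, LINK_TERMS):
        print(keyword)
```

With four entries in each list this yields 16 search phrases, starting with "offshore link exchange".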

In Extraction Data section select the type of data you need to extract: meta tags and emails.

Now go to URL Filters → Page must contain the following text to extract data. Enter the following strings in the box:

- links.html

- link.html

- resource.html

- add url

- submit url

- add your site

- submit your site

This way WDE extracts data only from those websites that do link exchange or add URLs to their directories.
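The effect of this filter can be sketched as a simple text check on each page. The example assumes that a page passes if it contains at least one of the strings and that matching ignores case; WDE's actual matching rules may differ.

```python
REQUIRED_STRINGS = [
    "links.html",
    "link.html",
    "resource.html",
    "add url",
    "submit url",
    "add your site",
    "submit your site",
]


def page_matches(page_text, required=REQUIRED_STRINGS):
    """Return True if the page contains at least one of the required strings."""
    text = page_text.lower()  # assumption: case-insensitive matching
    return any(s in text for s in required)


if __name__ == "__main__":
    print(page_matches("<a href='links.html'>Partners</a>"))
    print(page_matches("<p>Contact us</p>"))
```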

Press Start button and wait until session is finished or press Pause/Stop button.

After extraction is completed, go to Results → Meta Tag tab. It shows the titles, descriptions and other information of websites that do link exchange with other sites.

Then press Save icon in the right upper corner to open File Export dialog.

Browse the file where program will save the data and select further configuration.

Press Save button.

I have URL list in SQL database. I want to extract URLs, titles, descriptions, keywords and merge them into database

WDE cannot access SQL database. First you need to export URL list from SQL database to a plain text file and then use it in WDE.
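The export step depends on your database. As a minimal sketch, the following uses Python's built-in sqlite3 module with a hypothetical table named sites that has a url column; adapt the query and the connection to your own schema and database engine.

```python
import sqlite3


def url_lines(conn):
    """Return every stored URL that starts with http://, one per list entry."""
    lines = []
    # Assumption: URLs live in a table "sites" with a text column "url".
    for (url,) in conn.execute("SELECT url FROM sites ORDER BY url"):
        url = url.strip()
        if url.startswith("http://"):
            lines.append(url)
    return lines


def export_url_list(conn, path):
    """Write the URLs to a plain text file with one URL per line."""
    with open(path, "w", encoding="utf-8") as out:
        out.write("\n".join(url_lines(conn)) + "\n")


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sites (url TEXT)")
    conn.executemany(
        "INSERT INTO sites VALUES (?)",
        [("http://www.mydomain.com",), ("http://example.org",)],
    )
    print("\n".join(url_lines(conn)))
```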

Go to New Session dialog.

In Data Source section select URL List.

Browse the file containing list of URLs. This must be plain text file with one URL per line, each line starting with http://.

Select depth = One Page Only to extract meta tags of the specified root domains only. If you need to extract meta tags of all pages of each website, select maximal depth.

In Extraction Data section select what type of data you wish to extract: meta tags.

Press Start button and wait until session is finished or press Pause/Stop button.

Then press Save icon in the right upper corner to open File Export dialog.

Browse the file where program will save the data and select further configuration.

Press Save button.

Now you can import this CSV file into the SQL database.
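How the import looks depends on the database and on the columns chosen in the File Export dialog. The sketch below uses sqlite3 and assumes a CSV file with a header row containing the columns url, title, description and keywords; these column names and the page_meta table are hypothetical, so check them against your exported file.

```python
import csv
import sqlite3


def import_meta_csv(conn, lines):
    """Load exported meta tag rows into a table and return the row count.

    `lines` is any iterable of CSV text lines, for example an open file.
    """
    conn.execute(
        "CREATE TABLE IF NOT EXISTS page_meta ("
        "url TEXT PRIMARY KEY, title TEXT, description TEXT, keywords TEXT)"
    )
    reader = csv.DictReader(lines)
    rows = [
        (r["url"], r["title"], r["description"], r["keywords"]) for r in reader
    ]
    # INSERT OR REPLACE merges new data into rows that already exist.
    conn.executemany("INSERT OR REPLACE INTO page_meta VALUES (?, ?, ?, ?)", rows)
    conn.commit()
    return len(rows)
```

When reading from a real file, open it with `open(path, newline="", encoding="utf-8")` and pass the file object as `lines`.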

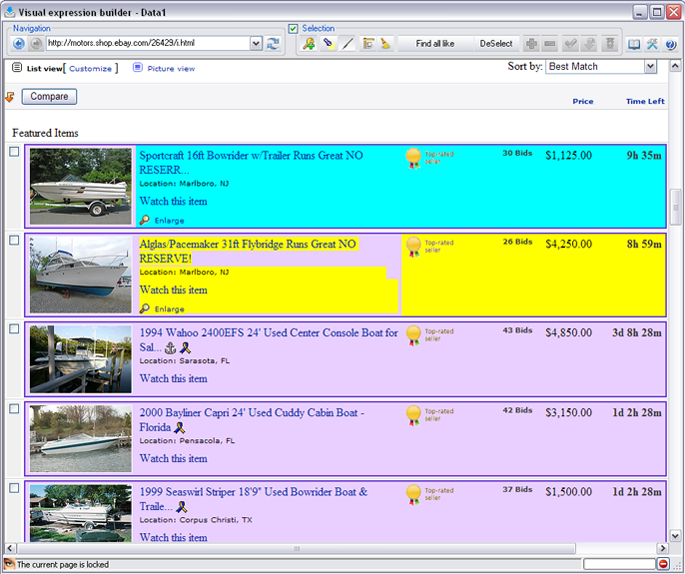

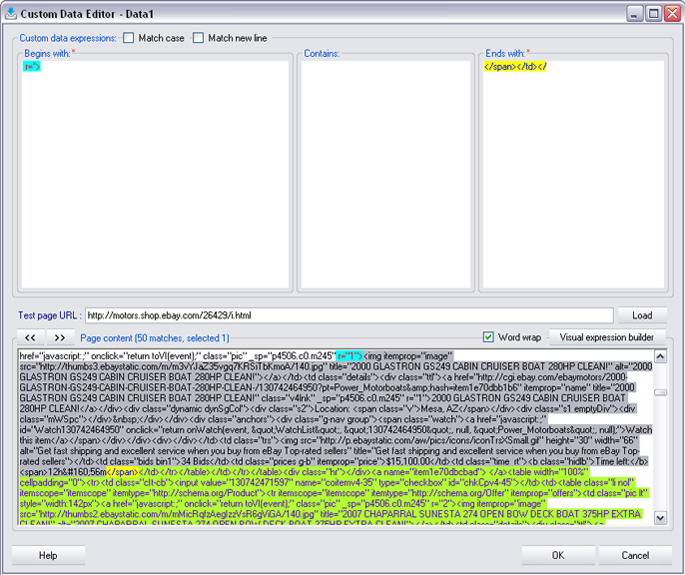

I want to form a complete list of motor boats with descriptions from Ebay

Go to New Session dialog.

In Data Source section select Site.

Enter website URL in Start URL box (http://motors.shop.ebay.com/Boats-/26429/i.html)

http://motors.shop.ebay.com/Boats-/26429/i.html*pgn=*

In Extraction Data section check Custom data to enable Custom Data Expressions section.

Enter Custom Data Editor.

Then enter Visual Expression Builder.

Using Information Container Backlight tool choose two items of information that have similar structure.

The program builds a pattern expression based on the two items you've selected.

Optimize the expression if you need WDE to work faster or apply it without optimization.

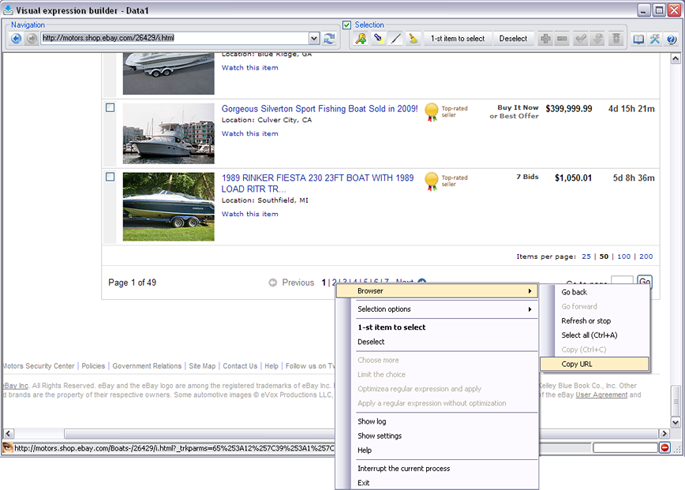

(Option:

If you want WDE to search only for information about boats (that is, only the pages listed at the bottom) and not any other products on Ebay, you should set the URL filter.

To do this, hover the cursor over any such page link and choose Browser → Copy URL.

Then paste this URL into URL filter → URL must contain keywords. Change the part containing a page number (which is common to all such links) to “*” (which stands for any number of any characters).

)
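The “*” wildcard in the URL filter can be illustrated with a short Python sketch that turns such a pattern into a regular expression. This is only an illustration of the idea, not WDE's implementation; the function names and the test URLs in the usage note are hypothetical.

```python
import re


def wildcard_to_regex(pattern):
    """Compile a pattern in which "*" stands for any number of any characters."""
    parts = [re.escape(part) for part in pattern.split("*")]
    return re.compile(".*".join(parts))


def url_matches(url, pattern):
    """Return True if the URL contains text matching the wildcard pattern."""
    return wildcard_to_regex(pattern).search(url) is not None


if __name__ == "__main__":
    pattern = "http://motors.shop.ebay.com/Boats-/26429/i.html*pgn=*"
    print(url_matches("http://motors.shop.ebay.com/Boats-/26429/i.html?_pgn=2", pattern))
    print(url_matches("http://motors.shop.ebay.com/Cars-/6001/i.html?_pgn=2", pattern))
```

All characters other than “*” are matched literally, so a "?" or "." in the URL is not treated as a special symbol.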

Based on these fragments of the expression, WDE will search for further information.

Press OK button.

Select maximal depth if you want the entire site to be spidered.

Press Start button and wait until session is finished or press Pause/Stop button.

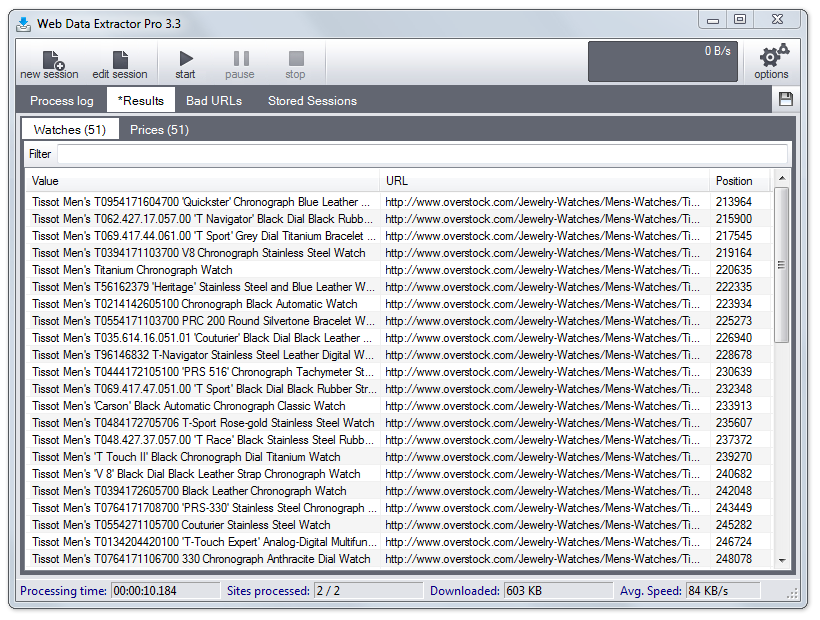

After extraction is completed, go to Results → Data 1. It contains all information on the website that matches your pattern expression.

Press Save icon in the right upper corner to open File Export dialog.

Browse the file where program will save the data and select further configuration.

Press Save button.

I want to form a complete list of motor boats with descriptions from Ebay and contact data

Repeat all previous steps except that in Extraction Data section select what type of data you want to extract: email, phone, fax, etc.

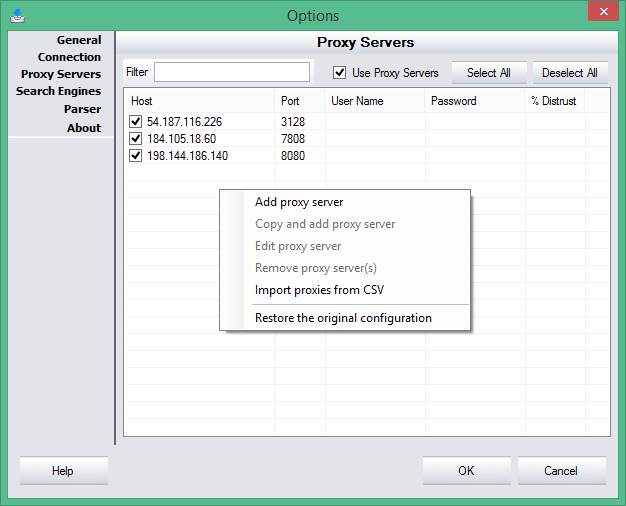

I want to use a list of proxy servers. How does it work?

List of urls looks like:

The "% Distrust" column can be empty or hold a value from 10% to 100%.

While the program runs, it distributes requests equally among the proxy servers in this list.

When a connection error occurs, the "% Distrust" column is set to 100%, and the request is dispatched to the next proxy server in the list.

Every protocol error increases the value, within the range from 0 to 90%, and the request goes to the next proxy server in the list whose distrust percentage is less than or equal to that of the current proxy server.

All other errors (the most common is an error in getting the answer, which happens while a proxy server is temporarily reloading) do not increase the distrust percentage. The request just goes to the next proxy server in the list whose distrust percentage is less than or equal to that of the current proxy server.

If all proxy servers reach 100% distrust, all requests fail with a corresponding error in the “Bad Urls” list, stating that there are no more running proxy servers, and the session is finished.

The "% Distrust" column is filled in according to the results of the last session run.

When a new session starts, all proxy servers get zero "% Distrust", i.e. full trust, and statistics are collected from scratch.

After a session, you can delete the proxy servers with 100% distrust from the list, since these proxy servers are apparently not operational.
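The distrust rules above can be sketched as a small Python model. It is an illustration under assumptions, not WDE's code: the class names, the error labels, the 10% step per protocol error, and the fallback to the least distrusted proxy are all hypothetical.

```python
from dataclasses import dataclass

PROTOCOL_STEP = 10  # assumption: each protocol error adds 10%, capped at 90%


@dataclass
class Proxy:
    address: str
    distrust: int = 0  # percent; every proxy starts a session fully trusted


class ProxyPool:
    def __init__(self, addresses):
        self.proxies = [Proxy(a) for a in addresses]

    def report(self, proxy, error):
        """Update distrust after a failed request."""
        if error == "connection":
            proxy.distrust = 100
        elif error == "protocol":
            raised = min(90, proxy.distrust + PROTOCOL_STEP)
            proxy.distrust = max(proxy.distrust, raised)
        # Any other error leaves the distrust value unchanged.

    def next_proxy(self, current):
        """Pick the next proxy whose distrust is not higher than the current one.

        Returns None when every proxy has reached 100% distrust.
        """
        n = len(self.proxies)
        start = self.proxies.index(current)
        order = [self.proxies[(start + i) % n] for i in range(1, n + 1)]
        usable = [p for p in order if p.distrust < 100]
        if not usable:
            return None  # no running proxy servers left; the session ends
        for p in usable:
            if p.distrust <= current.distrust:
                return p
        # Assumption: otherwise fall back to the least distrusted proxy.
        return min(usable, key=lambda p: p.distrust)
```

A session driver would call `report` after each failed request and `next_proxy` to choose where to send the retry, stopping when `next_proxy` returns `None`.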

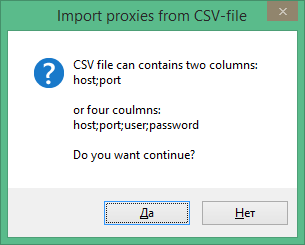

The proxy list can be imported from a CSV file, which can consist of 2 or 4 columns; the user is notified about this.
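A CSV proxy list can be checked for the expected column count before import. This page does not say what the columns contain, so the sketch below assumes host and port for 2 columns, plus user name and password for 4 columns; treat these meanings and the function name as hypothetical.

```python
import csv


def parse_proxy_rows(lines):
    """Parse CSV lines into proxy entries, accepting only 2 or 4 columns."""
    proxies = []
    for row in csv.reader(lines):
        if not row:
            continue  # skip empty lines
        if len(row) not in (2, 4):
            raise ValueError(f"expected 2 or 4 columns, got {len(row)}: {row}")
        # Assumption: columns are host, port and optionally user, password.
        host, port = row[0].strip(), int(row[1])
        user, password = (row[2], row[3]) if len(row) == 4 else (None, None)
        proxies.append(
            {"host": host, "port": port, "user": user, "password": password}
        )
    return proxies
```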